Scientific Research – Types, Purpose and Guide

Scientific Research

Definition:

Scientific research is the systematic and empirical investigation of phenomena, theories, or hypotheses, using various methods and techniques in order to acquire new knowledge or to validate existing knowledge.

It involves the collection, analysis, interpretation, and presentation of data, as well as the formulation and testing of hypotheses. Scientific research can be conducted in various fields, such as natural sciences, social sciences, and engineering, and may involve experiments, observations, surveys, or other forms of data collection. The goal of scientific research is to advance knowledge, improve understanding, and contribute to the development of solutions to practical problems.

Types of Scientific Research

Scientific research can be classified by purpose, method, and application. This section discusses four main types.

Descriptive Research

Descriptive research aims to describe or document a particular phenomenon or situation, without altering it in any way. This type of research is usually done through observation, surveys, or case studies. Descriptive research is useful in generating ideas, understanding complex phenomena, and providing a foundation for future research. However, it does not explain why a phenomenon occurs or establish causal relationships between variables.

Exploratory Research

Exploratory research aims to explore a new area of inquiry or develop initial ideas for future research. This type of research is usually conducted through observation, interviews, or focus groups. Exploratory research is useful in generating hypotheses, identifying research questions, and determining the feasibility of a larger study. However, it does not provide conclusive evidence or establish cause-and-effect relationships.

Experimental Research

Experimental research aims to test cause-and-effect relationships between variables by manipulating one variable and observing the effects on another variable. This type of research involves the use of an experimental group, which receives a treatment, and a control group, which does not receive the treatment. Experimental research is useful in establishing causal relationships, replicating results, and controlling extraneous variables. However, it may not be feasible or ethical to manipulate certain variables in some contexts.
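As a minimal sketch of the group assignment described above, the following Python snippet randomly splits a list of participants into an experimental group and a control group. The participant IDs, the `assign_groups` helper, and the fixed seed are illustrative assumptions; real studies may use stratified or blocked randomization instead.

```python
import random


def assign_groups(participants, seed=0):
    """Randomly split participants into (treatment, control) groups."""
    rng = random.Random(seed)      # fixed seed so the assignment is reproducible
    shuffled = list(participants)  # copy, so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical participant IDs, for illustration only.
participants = [f"P{i:02d}" for i in range(1, 21)]
treatment, control = assign_groups(participants, seed=42)
print(len(treatment), len(control))
```

Assigning participants by chance, rather than by the researcher's choice, is what allows differences in outcomes to be attributed, on average, to the treatment rather than to pre-existing differences between the groups.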

Correlational Research

Correlational research aims to examine the relationship between two or more variables without manipulating them. This type of research involves the use of statistical techniques to determine the strength and direction of the relationship between variables. Correlational research is useful in identifying patterns, predicting outcomes, and testing theories. However, it does not establish causation or control for confounding variables.
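One common way to quantify the strength and direction of a relationship is the Pearson correlation coefficient, which ranges from -1 to 1. The sketch below computes it with the Python standard library only. The `pearson_r` helper and the study-hours data are made up for illustration, and other coefficients (for example, rank-based ones) may suit other kinds of data better.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: hours studied and exam scores for five students.
hours_studied = [1, 2, 3, 4, 5]
exam_score = [52, 55, 61, 64, 70]
r = pearson_r(hours_studied, exam_score)
print(round(r, 3))
```

A value close to 1 here indicates a strong positive association, but, as noted above, it says nothing about whether studying longer causes higher scores.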

Scientific Research Methods

Scientific research methods are used to investigate phenomena, acquire knowledge, and answer questions using empirical evidence. Here are some commonly used methods:

Observational Studies

This method involves observing and recording phenomena as they occur in their natural setting. It can be done through direct observation or by using tools such as cameras, microscopes, or sensors.

Experimental Studies

This method involves manipulating one or more variables to determine the effect on the outcome. This type of study is often used to establish cause-and-effect relationships.

Survey Research

This method involves collecting data from a large number of people by asking them a set of standardized questions. Surveys can be conducted in person, over the phone, or online.

Case Studies

This method involves in-depth analysis of a single individual, group, or organization. Case studies are often used to gain insights into complex or unusual phenomena.

Meta-analysis

This method involves combining data from multiple studies to arrive at a more reliable conclusion. This technique can be used to identify patterns and trends across a large number of studies.
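One simple way to combine results is a fixed-effect, inverse-variance weighted average, in which more precise studies (those with smaller standard errors) receive more weight. The sketch below illustrates the idea. The `pooled_effect` helper and the three study results are hypothetical, and a fixed-effect model is only one of several approaches (random-effects models are often preferred when studies differ substantially).

```python
import math


def pooled_effect(effects, std_errors):
    """Fixed-effect inverse-variance pooled estimate and its standard error."""
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("need one standard error per effect estimate")
    if any(se <= 0 for se in std_errors):
        raise ValueError("standard errors must be positive")
    # Each study is weighted by the inverse of its variance (1 / SE^2).
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical effect estimates and standard errors from three studies.
effects = [0.30, 0.10, 0.25]
std_errors = [0.10, 0.20, 0.05]
pooled, pooled_se = pooled_effect(effects, std_errors)
print(round(pooled, 3), round(pooled_se, 3))
```

The pooled standard error is smaller than that of any single study, which is the sense in which combining studies can yield a more reliable conclusion.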

Qualitative Research

This method involves collecting and analyzing non-numerical data, such as interviews, focus groups, or observations. This type of research is often used to explore complex phenomena and to gain an understanding of people’s experiences and perspectives.

Quantitative Research

This method involves collecting and analyzing numerical data using statistical techniques. This type of research is often used to test hypotheses and, when combined with an experimental design, to establish cause-and-effect relationships.

Longitudinal Studies

This method involves following a group of individuals over a period of time to observe changes and to identify patterns and trends. This type of study can be used to investigate the long-term effects of a particular intervention or exposure.

Data Analysis Methods

There are many different data analysis methods used in scientific research, and the choice of method depends on the type of data being collected and the research question. Here are some commonly used data analysis methods:

- Descriptive statistics: This involves using summary statistics such as mean, median, mode, standard deviation, and range to describe the basic features of the data.

- Inferential statistics: This involves using statistical tests to make inferences about a population based on a sample of data. Examples of inferential statistics include t-tests, ANOVA, and regression analysis.

- Qualitative analysis: This involves analyzing non-numerical data such as interviews, focus groups, and observations. Qualitative analysis may involve identifying themes, patterns, or categories in the data.

- Content analysis: This involves analyzing the content of written or visual materials such as articles, speeches, or images. Content analysis may involve identifying themes, patterns, or categories in the content.

- Data mining: This involves using automated methods to analyze large datasets to identify patterns, trends, or relationships in the data.

- Machine learning: This involves using algorithms to analyze data and make predictions or classifications based on the patterns identified in the data.
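To make the first two items in the list above concrete, the following sketch computes descriptive statistics for one sample and a Welch's t statistic comparing two samples, using only the Python standard library. The scores and the `describe` and `welch_t` helpers are assumptions made for illustration. Turning the t statistic into a p-value requires a t distribution, which the standard library does not provide, so that step is left out here.

```python
import math
import statistics


def describe(values):
    """Return common summary statistics for a list of numbers."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "stdev": statistics.stdev(values),  # sample standard deviation (n - 1)
        "range": max(values) - min(values),
    }


def welch_t(sample_a, sample_b):
    """Welch's t statistic for the difference between two sample means."""
    var_a = statistics.variance(sample_a)  # sample variances (n - 1)
    var_b = statistics.variance(sample_b)
    std_err = math.sqrt(var_a / len(sample_a) + var_b / len(sample_b))
    return (statistics.mean(sample_a) - statistics.mean(sample_b)) / std_err


# Hypothetical test scores for a control group and a treatment group.
control = [70, 72, 68, 75, 72]
treatment = [78, 74, 80, 77, 76]

summary = describe(control)
t_stat = welch_t(treatment, control)
print(summary)
print(round(t_stat, 2))
```

The descriptive step summarizes the sample itself. The t statistic is the starting point for an inference about whether the two groups differ in the wider population.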

Application of Scientific Research

Scientific research has numerous applications in many fields, including:

- Medicine and healthcare: Scientific research is used to develop new drugs, medical treatments, and vaccines. It is also used to understand the causes and risk factors of diseases, as well as to develop new diagnostic tools and medical devices.

- Agriculture: Scientific research is used to develop new crop varieties, to improve crop yields, and to develop more sustainable farming practices.

- Technology and engineering: Scientific research is used to develop new technologies and engineering solutions, such as renewable energy systems, new materials, and advanced manufacturing techniques.

- Environmental science: Scientific research is used to understand the impacts of human activity on the environment and to develop solutions for mitigating those impacts. It is also used to monitor and manage natural resources, such as water and air quality.

- Education: Scientific research is used to develop new teaching methods and educational materials, as well as to understand how people learn and develop.

- Business and economics: Scientific research is used to understand consumer behavior, to develop new products and services, and to analyze economic trends and policies.

- Social sciences: Scientific research is used to understand human behavior, attitudes, and social dynamics. It is also used to develop interventions to improve social welfare and to inform public policy.

How to Conduct Scientific Research

Conducting scientific research involves several steps, including:

- Identify a research question: Start by identifying a question or problem that you want to investigate. This question should be clear, specific, and relevant to your field of study.

- Conduct a literature review: Before starting your research, conduct a thorough review of existing research in your field. This will help you identify gaps in knowledge and develop hypotheses or research questions.

- Develop a research plan: Once you have a research question, develop a plan for how you will collect and analyze data to answer that question. This plan should include a detailed methodology, a timeline, and a budget.

- Collect data: Depending on your research question and methodology, you may collect data through surveys, experiments, observations, or other methods.

- Analyze data: Once you have collected your data, analyze it using appropriate statistical or qualitative methods. This will help you draw conclusions about your research question.

- Interpret results: Based on your analysis, interpret your results and draw conclusions about your research question. Discuss any limitations or implications of your findings.

- Communicate results: Finally, communicate your findings to others in your field through presentations, publications, or other means.

Purpose of Scientific Research

The purpose of scientific research is to systematically investigate phenomena, acquire new knowledge, and advance our understanding of the world around us. Scientific research has several key goals, including:

- Exploring the unknown: Scientific research is often driven by curiosity and the desire to explore uncharted territory. Scientists investigate phenomena that are not well understood, in order to discover new insights and develop new theories.

- Testing hypotheses: Scientific research involves developing hypotheses or research questions, and then testing them through observation and experimentation. This allows scientists to evaluate the validity of their ideas and refine their understanding of the phenomena they are studying.

- Solving problems: Scientific research is often motivated by the desire to solve practical problems or address real-world challenges. For example, researchers may investigate the causes of a disease in order to develop new treatments, or explore ways to make renewable energy more affordable and accessible.

- Advancing knowledge: Scientific research is a collective effort to advance our understanding of the world around us. By building on existing knowledge and developing new insights, scientists contribute to a growing body of knowledge that can be used to inform decision-making, solve problems, and improve our lives.

Examples of Scientific Research

Here are some examples of scientific research that are currently ongoing or have recently been completed:

- Clinical trials for new treatments: Scientific research in the medical field often involves clinical trials to test new treatments for diseases and conditions. For example, clinical trials may be conducted to evaluate the safety and efficacy of new drugs or medical devices.

- Genomics research: Scientists are conducting research to better understand the human genome and its role in health and disease. This includes research on genetic mutations that can cause diseases such as cancer, as well as the development of personalized medicine based on an individual’s genetic makeup.

- Climate change: Scientific research is being conducted to understand the causes and impacts of climate change, as well as to develop solutions for mitigating its effects. This includes research on renewable energy technologies, carbon capture and storage, and sustainable land use practices.

- Neuroscience: Scientists are conducting research to understand the workings of the brain and the nervous system, with the goal of developing new treatments for neurological disorders such as Alzheimer’s disease and Parkinson’s disease.

- Artificial intelligence: Researchers are working to develop new algorithms and technologies to improve the capabilities of artificial intelligence systems. This includes research on machine learning, computer vision, and natural language processing.

- Space exploration: Scientific research is being conducted to explore the cosmos and learn more about the origins of the universe. This includes research on exoplanets, black holes, and the search for extraterrestrial life.

When to Use Scientific Research

Some specific situations where scientific research may be particularly useful include:

- Solving problems: Scientific research can be used to investigate practical problems or address real-world challenges. For example, scientists may investigate the causes of a disease in order to develop new treatments, or explore ways to make renewable energy more affordable and accessible.

- Decision-making: Scientific research can provide evidence-based information to inform decision-making. For example, policymakers may use scientific research to evaluate the effectiveness of different policy options or to make decisions about public health and safety.

- Innovation: Scientific research can be used to develop new technologies, products, and processes. For example, research on materials science can lead to the development of new materials with unique properties that can be used in a range of applications.

- Knowledge creation: Scientific research is an important way of generating new knowledge and advancing our understanding of the world around us. This can lead to new theories, insights, and discoveries that can benefit society.

Advantages of Scientific Research

There are many advantages of scientific research, including:

- Improved understanding: Scientific research allows us to gain a deeper understanding of the world around us, from the smallest subatomic particles to the largest celestial bodies.

- Evidence-based decision making: Scientific research provides evidence-based information that can inform decision-making in many fields, from public policy to medicine.

- Technological advancements: Scientific research drives technological advancements in fields such as medicine, engineering, and materials science. These advancements can improve quality of life, increase efficiency, and reduce costs.

- New discoveries: Scientific research can lead to new discoveries and breakthroughs that can advance our knowledge in many fields. These discoveries can lead to new theories, technologies, and products.

- Economic benefits: Scientific research can stimulate economic growth by creating new industries and jobs, and by generating new technologies and products.

- Improved health outcomes: Scientific research can lead to the development of new medical treatments and technologies that can improve health outcomes and quality of life for people around the world.

- Increased innovation: Scientific research encourages innovation by promoting collaboration, creativity, and curiosity. This can lead to new and unexpected discoveries that can benefit society.

Limitations of Scientific Research

Scientific research has some limitations that researchers should be aware of. These limitations can include:

- Research design limitations: The design of a research study can impact the reliability and validity of the results. Poorly designed studies can lead to inaccurate or inconclusive results. Researchers must carefully consider the study design to ensure that it is appropriate for the research question and the population being studied.

- Sample size limitations: The size of the sample being studied can impact the generalizability of the results. Small sample sizes may not be representative of the larger population, and may lead to incorrect conclusions.

- Time and resource limitations: Scientific research can be costly and time-consuming. Researchers may not have the resources necessary to conduct a large-scale study, or may not have sufficient time to complete a study with appropriate controls and analysis.

- Ethical limitations: Certain types of research may raise ethical concerns, such as studies involving human or animal subjects. Ethical concerns may limit the scope of the research that can be conducted, or require additional protocols and procedures to ensure the safety and well-being of participants.

- Limitations of technology: Technology may limit the types of research that can be conducted, or the accuracy of the data collected. For example, certain types of research may require advanced technology that is not yet available, or may be limited by the accuracy of current measurement tools.

- Limitations of existing knowledge: Existing knowledge may limit the types of research that can be conducted. For example, if there is limited knowledge in a particular field, it may be difficult to design a study that can provide meaningful results.
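The sample size limitation listed above can be made concrete with the standard error of the mean, which is the sample standard deviation divided by the square root of the sample size. The sketch below uses an assumed standard deviation of 10 and a hypothetical `standard_error` helper to show how precision improves as the sample grows.

```python
import math


def standard_error(sample_sd, n):
    """Standard error of the mean for a sample of size n."""
    if n < 1:
        raise ValueError("sample size must be at least 1")
    return sample_sd / math.sqrt(n)


# Assumed sample standard deviation of 10, for illustration only.
for n in (25, 100, 400):
    print(n, standard_error(10.0, n))
```

Because the standard error shrinks with the square root of the sample size, quadrupling the sample only halves the uncertainty, which is one reason adequately powered studies can be costly.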

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

What Is Research, and Why Do People Do It?

- Open Access

- First Online: 03 December 2022

James Hiebert, Jinfa Cai, Stephen Hwang, Anne K Morris & Charles Hohensee

Part of the book series: Research in Mathematics Education (RME)

Abstract

Every day people do research as they gather information to learn about something of interest. In the scientific world, however, research means something different than simply gathering information. Scientific research is characterized by its careful planning and observing, by its relentless efforts to understand and explain, and by its commitment to learn from everyone else seriously engaged in research. We call this kind of research scientific inquiry and define it as “formulating, testing, and revising hypotheses.” By “hypotheses” we do not mean the hypotheses you encounter in statistics courses. We mean predictions about what you expect to find and rationales for why you made these predictions. Throughout this and the remaining chapters we make clear that the process of scientific inquiry applies to all kinds of research studies and data, both qualitative and quantitative.

Part I. What Is Research?

Have you ever studied something carefully because you wanted to know more about it? Maybe you wanted to know more about your grandmother’s life when she was younger so you asked her to tell you stories from her childhood, or maybe you wanted to know more about a fertilizer you were about to use in your garden so you read the ingredients on the package and looked them up online. According to the dictionary definition, you were doing research.

Recall your high school assignments asking you to “research” a topic. The assignment likely included consulting a variety of sources that discussed the topic, perhaps including some “original” sources. Often, the teacher referred to your product as a “research paper.”

Were you conducting research when you interviewed your grandmother or wrote high school papers reviewing a particular topic? Our view is that you were engaged in part of the research process, but only a small part. In this book, we reserve the word “research” for what it means in the scientific world, that is, for scientific research or, more pointedly, for scientific inquiry.

Exercise 1.1

Before you read any further, write a definition of what you think scientific inquiry is. Keep it short: two to three sentences. You will periodically update this definition as you read this chapter and the remainder of the book.

This book is about scientific inquiry—what it is and how to do it. For starters, scientific inquiry is a process, a particular way of finding out about something that involves a number of phases. Each phase of the process constitutes one aspect of scientific inquiry. You are doing scientific inquiry as you engage in each phase, but you have not done scientific inquiry until you complete the full process. Each phase is necessary but not sufficient.

In this chapter, we set the stage by defining scientific inquiry—describing what it is and what it is not—and by discussing what it is good for and why people do it. The remaining chapters build directly on the ideas presented in this chapter.

A first thing to know is that scientific inquiry is not all or nothing. “Scientificness” is a continuum. Inquiries can be more scientific or less scientific. What makes an inquiry more scientific? You might be surprised there is no universally agreed upon answer to this question. None of the descriptors we know of are sufficient by themselves to define scientific inquiry. But all of them give you a way of thinking about some aspects of the process of scientific inquiry. Each one gives you different insights.

Exercise 1.2

As you read about each descriptor below, think about what would make an inquiry more or less scientific. If you think a descriptor is important, use it to revise your definition of scientific inquiry.

Creating an Image of Scientific Inquiry

We will present three descriptors of scientific inquiry. Each provides a different perspective and emphasizes a different aspect of scientific inquiry. We will draw on all three descriptors to compose our definition of scientific inquiry.

Descriptor 1. Experience Carefully Planned in Advance

Sir Ronald Fisher, often called the father of modern statistical design, once referred to research as “experience carefully planned in advance” (1935, p. 8). He said that humans are always learning from experience, from interacting with the world around them. Usually, this learning is haphazard rather than the result of a deliberate process carried out over an extended period of time. Research, Fisher said, was learning from experience, but experience carefully planned in advance.

This phrase can be fully appreciated by looking at each word. The fact that scientific inquiry is based on experience means that it is based on interacting with the world. These interactions could be thought of as the stuff of scientific inquiry. In addition, it is not just any experience that counts. The experience must be carefully planned. The interactions with the world must be conducted with an explicit, describable purpose, and steps must be taken to make the intended learning as likely as possible. This planning is an integral part of scientific inquiry; it is not just a preparation phase. It is one of the things that distinguishes scientific inquiry from many everyday learning experiences. Finally, these steps must be taken beforehand and the purpose of the inquiry must be articulated in advance of the experience. Clearly, scientific inquiry does not happen by accident, by just stumbling into something. Stumbling into something unexpected and interesting can happen while engaged in scientific inquiry, but learning does not depend on it and serendipity does not make the inquiry scientific.

Descriptor 2. Observing Something and Trying to Explain Why It Is the Way It Is

When we were writing this chapter and googled “scientific inquiry,” the first entry was: “Scientific inquiry refers to the diverse ways in which scientists study the natural world and propose explanations based on the evidence derived from their work.” The emphasis is on studying, or observing, and then explaining. This descriptor takes the image of scientific inquiry beyond carefully planned experience and includes explaining what was experienced.

According to the Merriam-Webster dictionary, “explain” means “(a) to make known, (b) to make plain or understandable, (c) to give the reason or cause of, and (d) to show the logical development or relations of” (Merriam-Webster, n.d.). We will use all these definitions. Taken together, they suggest that to explain an observation means to understand it by finding reasons (or causes) for why it is as it is. In this sense of scientific inquiry, the following are synonyms: explaining why, understanding why, and reasoning about causes and effects. Our image of scientific inquiry now includes planning, observing, and explaining why.

We need to add a final note about this descriptor. We have phrased it in a way that suggests “observing something” means you are observing something in real time, that is, observing the way things are or the way things are changing. This is often true. But observing could also mean observing data that have already been collected, perhaps by someone else who made the original observations (e.g., secondary analysis of NAEP data or analysis of existing video recordings of classroom instruction). We will address secondary analyses more fully in Chap. 4. For now, what is important is that the process requires explaining why the data look like they do.

We must note that for us, the term “data” is not limited to numerical or quantitative data such as test scores. Data can also take many nonquantitative forms, including written survey responses, interview transcripts, journal entries, video recordings of students, teachers, and classrooms, text messages, and so forth.

Exercise 1.3

What are the implications of the statement that just “observing” is not enough to count as scientific inquiry? Does this mean that a detailed description of a phenomenon is not scientific inquiry?

Find sources that define research in education in ways that differ from our position, that is, sources that say description alone, without explanation, counts as scientific research. Identify the precise points where the opinions differ. What are the best arguments for each of the positions? Which do you prefer? Why?

Descriptor 3. Updating Everyone’s Thinking in Response to More and Better Information

This descriptor focuses on a third aspect of scientific inquiry: updating and advancing the field’s understanding of phenomena that are investigated. This descriptor foregrounds a powerful characteristic of scientific inquiry: the reliability (or trustworthiness) of what is learned and the ultimate inevitability of this learning to advance human understanding of phenomena. Humans might choose not to learn from scientific inquiry, but history suggests that scientific inquiry always has the potential to advance understanding and that, eventually, humans take advantage of these new understandings.

Before exploring these bold claims a bit further, note that this descriptor uses “information” in the same way the previous two descriptors used “experience” and “observations.” These are the stuff of scientific inquiry and we will use them often, sometimes interchangeably. Frequently, we will use the term “data” to stand for all these terms.

An overriding goal of scientific inquiry is for everyone to learn from what one scientist does. Much of this book is about the methods you need to use so others have faith in what you report and can learn the same things you learned. This aspect of scientific inquiry has many implications.

One implication is that scientific inquiry is not a private practice. It is a public practice available for others to see and learn from. Notice how different this is from everyday learning. When you happen to learn something from your everyday experience, often only you gain from the experience. The fact that research is a public practice means it is also a social one. It is best conducted by interacting with others along the way: soliciting feedback at each phase, taking opportunities to present work-in-progress, and benefitting from the advice of others.

A second implication is that you, as the researcher, must be committed to sharing what you are doing and what you are learning in an open and transparent way. This allows all phases of your work to be scrutinized and critiqued. This is what gives your work credibility. The reliability or trustworthiness of your findings depends on your colleagues recognizing that you have used all appropriate methods to maximize the chances that your claims are justified by the data.

A third implication of viewing scientific inquiry as a collective enterprise is the reverse of the second: you must be committed to receiving comments from others. You must treat your colleagues as fair and honest critics even though it might sometimes feel otherwise. You must appreciate their job, which is to remain skeptical while scrutinizing what you have done in considerable detail. To provide the best help to you, they must remain skeptical about your conclusions (when, for example, the data are difficult for them to interpret) until you offer a convincing logical argument based on the information you share. A rather harsh but good-to-remember statement of the role of your friendly critics was voiced by Karl Popper, a well-known twentieth-century philosopher of science: “. . . if you are interested in the problem which I tried to solve by my tentative assertion, you may help me by criticizing it as severely as you can” (Popper, 1968, p. 27).

A final implication of this third descriptor is that, as someone engaged in scientific inquiry, you have no choice but to update your thinking when the data support a different conclusion. This applies to your own data as well as to those of others. When data clearly point to a specific claim, even one that is quite different from what you expected, you must reconsider your position. If the outcome is replicated multiple times, you need to adjust your thinking accordingly. Scientific inquiry does not let you pick and choose which data to believe; it mandates that everyone update their thinking when the data warrant an update.

Doing Scientific Inquiry

We define scientific inquiry in an operational sense: what does it mean to do scientific inquiry? What kind of process would satisfy all three descriptors: carefully planning an experience in advance; observing and trying to explain what you see; and contributing to updating everyone’s thinking about an important phenomenon?

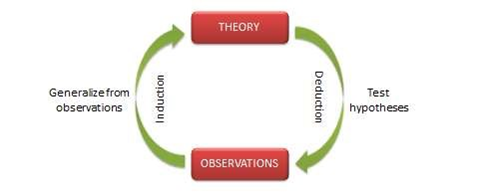

We define scientific inquiry as formulating, testing, and revising hypotheses about phenomena of interest.

Of course, we are not the only ones who define it in this way. The definition for the scientific method posted by the editors of Britannica is: “a researcher develops a hypothesis, tests it through various means, and then modifies the hypothesis on the basis of the outcome of the tests and experiments” (Britannica, n.d.).

Notice how defining scientific inquiry this way satisfies each of the descriptors. “Carefully planning an experience in advance” is exactly what happens when formulating a hypothesis about a phenomenon of interest and thinking about how to test it. “Observing a phenomenon” occurs when testing a hypothesis, and “explaining” what is found is required when revising a hypothesis based on the data. Finally, “updating everyone’s thinking” comes from comparing publicly the original with the revised hypothesis.

Doing scientific inquiry, as we have defined it, underscores the value of accumulating knowledge rather than generating random bits of knowledge. Formulating, testing, and revising hypotheses is an ongoing process, with each revised hypothesis begging for another test, whether by the same researcher or by new researchers. The editors of Britannica signaled this cyclic process by adding the following phrase to their definition of the scientific method: “The modified hypothesis is then retested, further modified, and tested again.” Scientific inquiry creates a process that encourages each study to build on the studies that have gone before. Through collective engagement in this process of building study on top of study, the scientific community works together to update its thinking.

Before exploring more fully the meaning of “formulating, testing, and revising hypotheses,” we need to acknowledge that this is not the only way researchers define research. Some researchers prefer a less formal definition, one that includes more serendipity, less planning, less explanation. You might have come across more open definitions such as “research is finding out about something.” We prefer the tighter hypothesis formulation, testing, and revision definition because we believe it provides a single, coherent map for conducting research that addresses many of the thorny problems educational researchers encounter. We believe it is the most useful orientation toward research and the most helpful to learn as a beginning researcher.

A final clarification of our definition is that it applies equally to qualitative and quantitative research. This is a familiar distinction in education that has generated much discussion. You might think our definition favors quantitative methods over qualitative methods because the language of hypothesis formulation and testing is often associated with quantitative methods. In fact, we do not favor one method over another. In Chap. 4, we will illustrate how our definition fits research using a range of quantitative and qualitative methods.

Exercise 1.4

Look for ways to extend what the field knows in an area that has already received attention by other researchers. Specifically, you can search for a program of research carried out by more experienced researchers that has some revised hypotheses that remain untested. Identify a revised hypothesis that you might like to test.

Unpacking the Terms Formulating, Testing, and Revising Hypotheses

To get a full sense of the definition of scientific inquiry we will use throughout this book, it is helpful to spend a little time with each of the key terms.

We first want to make clear that we use the term “hypothesis” as it is defined in most dictionaries and as it is used in many scientific fields rather than as it is usually defined in educational statistics courses. By “hypothesis,” we do not mean a null hypothesis that is accepted or rejected by statistical analysis. Rather, we use “hypothesis” in the sense conveyed by the following definitions: “An idea or explanation for something that is based on known facts but has not yet been proved” (Cambridge University Press, n.d.), and “An unproved theory, proposition, or supposition, tentatively accepted to explain certain facts and to provide a basis for further investigation or argument” (Agnes & Guralnik, 2008).

We distinguish two parts to “hypotheses.” Hypotheses consist of predictions and rationales. Predictions are statements about what you expect to find when you inquire about something. Rationales are explanations for why you made the predictions you did and why you believe your predictions are correct. So, for us, “formulating hypotheses” means making explicit predictions and developing rationales for the predictions.

“Testing hypotheses” means making observations that allow you to assess in what ways your predictions were correct and in what ways they were incorrect. In education research, it is rarely useful to think of your predictions as either right or wrong. Because of the complexity of most issues you will investigate, most predictions will be right in some ways and wrong in others.

By studying the observations you make (data you collect) to test your hypotheses, you can revise your hypotheses to better align with the observations. This means revising your predictions plus revising your rationales to justify your adjusted predictions. Even though you might not run another test, formulating revised hypotheses is an essential part of conducting a research study. Comparing your original and revised hypotheses informs everyone of what you learned by conducting your study. In addition, a revised hypothesis sets the stage for you or someone else to extend your study and accumulate more knowledge of the phenomenon.

We should note that not everyone makes a clear distinction between predictions and rationales as two aspects of hypotheses. In fact, common, non-scientific uses of the word “hypothesis” may limit it to only a prediction or only an explanation (or rationale). We choose to explicitly include both prediction and rationale in our definition of hypothesis, not because we assert this should be the universal definition, but because we want to foreground the importance of both parts acting in concert. Using “hypothesis” to represent both prediction and rationale could hide the two aspects, but we make them explicit because they provide different kinds of information. It is usually easier to make predictions than develop rationales because predictions can be guesses, hunches, or gut feelings about which you have little confidence. Developing a compelling rationale requires careful thought plus reading what other researchers have found plus talking with your colleagues. Often, while you are developing your rationale you will find good reasons to change your predictions. Developing good rationales is the engine that drives scientific inquiry. Rationales are essentially descriptions of how much you know about the phenomenon you are studying. Throughout this guide, we will elaborate on how developing good rationales drives scientific inquiry. For now, we simply note that it can sharpen your predictions and help you to interpret your data as you test your hypotheses.

Hypotheses in education research take a variety of forms or types. This is because there are a variety of phenomena that can be investigated. Investigating educational phenomena is sometimes best done using qualitative methods, sometimes using quantitative methods, and most often using mixed methods (e.g., Hay, 2016; Weis et al., 2019a; Weisner, 2005). This means that, given our definition, hypotheses are equally applicable to qualitative and quantitative investigations.

Hypotheses take different forms when they are used to investigate different kinds of phenomena. Two very different research activities in education could be labeled experiments and descriptions. In an experiment, a hypothesis makes a prediction about anticipated changes, such as the changes that occur when a treatment or intervention is applied. You might investigate how students’ thinking changes during a particular kind of instruction.

A second type of hypothesis, relevant for descriptive research, makes a prediction about what you will find when you investigate and describe the nature of a situation. The goal is to understand a situation as it exists rather than to understand a change from one situation to another. In this case, your prediction is what you expect to observe. Your rationale is the set of reasons for making this prediction; it is your current explanation for why the situation will look like it does.

You will probably read, if you have not already, that some researchers say you do not need a prediction to conduct a descriptive study. We will discuss this point of view in Chap. 2. For now, we simply claim that scientific inquiry, as we have defined it, applies to all kinds of research studies. Descriptive studies, like others, not only benefit from formulating, testing, and revising hypotheses, but also need hypothesis formulating, testing, and revising.

One reason we define research as formulating, testing, and revising hypotheses is that if you think of research in this way you are less likely to go wrong. It is a useful guide for the entire process, as we will describe in detail in the chapters ahead. For example, as you build the rationale for your predictions, you are constructing the theoretical framework for your study (Chap. 3). As you work out the methods you will use to test your hypothesis, every decision you make will be based on asking, “Will this help me formulate or test or revise my hypothesis?” (Chap. 4). As you interpret the results of testing your predictions, you will compare them to what you predicted and examine the differences, focusing on how you must revise your hypotheses (Chap. 5). By anchoring the process to formulating, testing, and revising hypotheses, you will make smart decisions that yield a coherent and well-designed study.

Exercise 1.5

Compare the concept of formulating, testing, and revising hypotheses with the descriptions of scientific inquiry contained in Scientific Research in Education (NRC, 2002). How are they similar or different?

Exercise 1.6

Provide an example to illustrate and emphasize the differences between everyday learning/thinking and scientific inquiry.

Learning from Doing Scientific Inquiry

We noted earlier that a measure of what you have learned by conducting a research study is found in the differences between your original hypothesis and your revised hypothesis based on the data you collected to test your hypothesis. We will elaborate this statement in later chapters, but we preview our argument here.

Even before collecting data, scientific inquiry requires cycles of making a prediction, developing a rationale, refining your predictions, reading and studying more to strengthen your rationale, refining your predictions again, and so forth. And, even if you have run through several such cycles, you still will likely find that when you test your prediction you will be partly right and partly wrong. The results will support some parts of your predictions but not others, or the results will “kind of” support your predictions. A critical part of scientific inquiry is making sense of your results by interpreting them against your predictions. Carefully describing what aspects of your data supported your predictions, what aspects did not, and what data fell outside of any predictions is not an easy task, but you cannot learn from your study without doing this analysis.

Analyzing the matches and mismatches between your predictions and your data allows you to formulate different rationales that would have accounted for more of the data. The best revised rationale is the one that accounts for the most data. Once you have revised your rationales, you can think about the predictions they best justify or explain. It is by comparing your original rationales to your new rationales that you can sort out what you learned from your study.

Suppose your study was an experiment. Maybe you were investigating the effects of a new instructional intervention on students’ learning. Your original rationale was your explanation for why the intervention would change the learning outcomes in a particular way. Your revised rationale explained why the changes that you observed occurred like they did and why your revised predictions are better. Maybe your original rationale focused on the potential of the activities if they were implemented in ideal ways and your revised rationale included the factors that are likely to affect how teachers implement them. By comparing the before and after rationales, you are describing what you learned—what you can explain now that you could not before. Another way of saying this is that you are describing how much more you understand now than before you conducted your study.

Revised predictions based on carefully planned and collected data usually exhibit some of the following features compared with the originals: more precision, more completeness, and broader scope. Revised rationales have more explanatory power and become more complete, more aligned with the new predictions, sharper, and overall more convincing.

Part II. Why Do Educators Do Research?

Doing scientific inquiry is a lot of work. Each phase of the process takes time, and you will often cycle back to improve earlier phases as you engage in later phases. Because of the significant effort required, you should make sure your study is worth it. So, from the beginning, you should think about the purpose of your study. Why do you want to do it? And, because research is a social practice, you should also think about whether the results of your study are likely to be important and significant to the education community.

If you are doing research in the way we have described (as scientific inquiry), then one purpose of your study is to understand, not just to describe or evaluate or report. As we noted earlier, when you formulate hypotheses, you are developing rationales that explain why things might be like they are. In our view, trying to understand and explain is what separates research from other kinds of activities, like evaluating or describing.

One reason understanding is so important is that it allows researchers to see how or why something works like it does. When you see how something works, you are better able to predict how it might work in other contexts, under other conditions. And, because conditions, or contextual factors, matter a lot in education, gaining insights into applying your findings to other contexts increases the contributions of your work and its importance to the broader education community.

Consequently, the purposes of research studies in education often include the more specific aim of identifying and understanding the conditions under which the phenomena being studied work like the observations suggest. A classic example of this kind of study in mathematics education was reported by William Brownell and Harold Moser in 1949. They were trying to establish which method of subtracting whole numbers could be taught most effectively: the regrouping method or the equal additions method. However, they realized that effectiveness might depend on the conditions under which the methods were taught, “meaningfully” versus “mechanically.” So, they designed a study that crossed the two instructional approaches (meaningful and mechanical) with the two subtraction methods (regrouping and equal additions). Among other results, they found that these conditions did matter. The regrouping method was more effective under the meaningful condition than the mechanical condition, but the same was not true for the equal additions method.
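To make the idea of "crossing" two factors concrete, here is a minimal Python sketch that enumerates the four conditions implied by the Brownell and Moser design described above. The level labels come from the text; the variable and function names are our own illustrative choices, and the sketch says nothing about how the original study assigned students or measured outcomes.

```python
from itertools import product

# Factor levels named in the text; the variable names are illustrative.
methods = ["regrouping", "equal additions"]
approaches = ["meaningful", "mechanical"]


def crossed_design(*factors):
    """Return every combination of one level from each factor."""
    return list(product(*factors))


conditions = crossed_design(methods, approaches)
for method, approach in conditions:
    print(f"method: {method:<16} instruction: {approach}")
```

Because every method appears under every instructional approach, a design like this lets a researcher ask whether the effect of the method depends on how it is taught, which is the kind of conditional finding the paragraph above reports.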

What do education researchers want to understand? In our view, the ultimate goal of education is to offer all students the best possible learning opportunities. So, we believe the ultimate purpose of scientific inquiry in education is to develop understanding that supports the improvement of learning opportunities for all students. We say “ultimate” because there are lots of issues that must be understood to improve learning opportunities for all students. Hypotheses about many aspects of education are connected, ultimately, to students’ learning. For example, formulating and testing a hypothesis that preservice teachers need to engage in particular kinds of activities in their coursework in order to teach particular topics well is, ultimately, connected to improving students’ learning opportunities. So is hypothesizing that school districts often devote relatively few resources to instructional leadership training or hypothesizing that positioning mathematics as a tool students can use to combat social injustice can help students see the relevance of mathematics to their lives.

We do not exclude the importance of research on educational issues more removed from improving students’ learning opportunities, but we do think the argument for their importance will be more difficult to make. If there is no way to imagine a connection between your hypothesis and improving learning opportunities for students, even a distant connection, we recommend you reconsider whether it is an important hypothesis within the education community.

Notice that we said the ultimate goal of education is to offer all students the best possible learning opportunities. For too long, educators have been satisfied with a goal of offering rich learning opportunities for lots of students, sometimes even for just the majority of students, but not necessarily for all students. Evaluations of success often are based on outcomes that show high averages. In other words, if many students have learned something, or even a smaller number have learned a lot, educators may have been satisfied. The problem is that there is usually a pattern in the groups of students who receive lower quality opportunities—students of color and students who live in poor areas, urban and rural. This is not acceptable. Consequently, we emphasize the premise that the purpose of education research is to offer rich learning opportunities to all students.

One way to make sure you will be able to convince others of the importance of your study is to consider investigating some aspect of teachers’ shared instructional problems. Historically, researchers in education have set their own research agendas, regardless of the problems teachers are facing in schools. It is increasingly recognized that teachers have had trouble applying to their own classrooms what researchers find. To address this problem, a researcher could partner with a teacher (better yet, a small group of teachers) and talk with them about instructional problems they all share. These discussions can create a rich pool of problems researchers can consider. If researchers pursued one of these problems (preferably alongside teachers), the connection to improving learning opportunities for all students could be direct and immediate. “Grounding a research question in instructional problems that are experienced across multiple teachers’ classrooms helps to ensure that the answer to the question will be of sufficient scope to be relevant and significant beyond the local context” (Cai et al., 2019b, p. 115).

As a beginning researcher, determining the relevance and importance of a research problem is especially challenging. We recommend talking with advisors, other experienced researchers, and peers to test the educational importance of possible research problems and topics of study. You will also learn much more about the issue of research importance when you read Chap. 5.

Exercise 1.7

Identify a problem in education that is closely connected to improving learning opportunities and a problem that has a less close connection. For each problem, write a brief argument (like a logical sequence of if-then statements) that connects the problem to all students’ learning opportunities.

Part III. Conducting Research as a Practice of Failing Productively

Scientific inquiry involves formulating hypotheses about phenomena that are not fully understood—by you or anyone else. Even if you are able to inform your hypotheses with lots of knowledge that has already been accumulated, you are likely to find that your prediction is not entirely accurate. This is normal. Remember, scientific inquiry is a process of constantly updating your thinking. More and better information means revising your thinking, again, and again, and again. Because you never fully understand a complicated phenomenon and your hypotheses never produce completely accurate predictions, it is easy to believe you are somehow failing.

The trick is to fail upward, to fail to predict accurately in ways that inform your next hypothesis so you can make a better prediction. Some of the best-known researchers in education have been open and honest about the many times their predictions were wrong and, based on the results of their studies and those of others, they continuously updated their thinking and changed their hypotheses.

A striking example of publicly revising (actually reversing) hypotheses due to incorrect predictions is found in the work of Lee J. Cronbach, one of the most distinguished educational psychologists of the twentieth century. In 1957, Cronbach delivered his presidential address to the American Psychological Association. Titling it “The Two Disciplines of Scientific Psychology,” Cronbach proposed a rapprochement between two research approaches: correlational studies that focused on individual differences and experimental studies that focused on instructional treatments controlling for individual differences. (We will examine different research approaches in Chap. 4.) If these approaches could be brought together, reasoned Cronbach (1957), researchers could find interactions between individual characteristics and treatments (aptitude-treatment interactions or ATIs), fitting the best treatments to different individuals.

In 1975, after years of research by many researchers looking for ATIs, Cronbach acknowledged the evidence for simple, useful ATIs had not been found. Even when trying to find interactions between a few variables that could provide instructional guidance, the analysis, said Cronbach, creates “a hall of mirrors that extends to infinity, tormenting even the boldest investigators and defeating even ambitious designs” (Cronbach, 1975, p. 119).

As he was reflecting back on his work, Cronbach (1986) recommended moving away from documenting instructional effects through statistical inference (an approach he had championed for much of his career) and toward approaches that probe the reasons for these effects, approaches that provide a “full account of events in a time, place, and context” (Cronbach, 1986, p. 104). This is a remarkable change in hypotheses, a change based on data and made fully transparent. Cronbach understood the value of failing productively.

Closer to home, in a less dramatic example, one of us began a line of scientific inquiry into how to prepare elementary preservice teachers to teach early algebra. Teaching early algebra meant engaging elementary students in early forms of algebraic reasoning. Such reasoning should help them transition from arithmetic to algebra. To begin this line of inquiry, a set of activities for preservice teachers were developed. Even though the activities were based on well-supported hypotheses, they largely failed to engage preservice teachers as predicted because of unanticipated challenges the preservice teachers faced. To capitalize on this failure, follow-up studies were conducted, first to better understand elementary preservice teachers’ challenges with preparing to teach early algebra, and then to better support preservice teachers in navigating these challenges. In this example, the initial failure was a necessary step in the researchers’ scientific inquiry and furthered the researchers’ understanding of this issue.

We present another example of failing productively in Chap. 2. That example emerges from recounting the history of a well-known research program in mathematics education.

Making mistakes is an inherent part of doing scientific research. Conducting a study is rarely a smooth path from beginning to end. We recommend that you keep the following things in mind as you begin a career of conducting research in education.

First, do not get discouraged when you make mistakes; do not fall into the trap of feeling like you are not capable of doing research because you make too many errors.

Second, learn from your mistakes. Do not ignore your mistakes or treat them as errors that you simply need to forget and move past. Mistakes are rich sites for learning—in research just as in other fields of study.

Third, by reflecting on your mistakes, you can learn to make better mistakes, mistakes that inform you about a productive next step. You will not be able to eliminate your mistakes, but you can set a goal of making better and better mistakes.

Exercise 1.8

How does scientific inquiry differ from everyday learning in giving you the tools to fail upward? You may find helpful perspectives on this question in other resources on science and scientific inquiry (e.g., Failure: Why Science is So Successful by Firestein, 2015).

Exercise 1.9

Use what you have learned in this chapter to write a new definition of scientific inquiry. Compare this definition with the one you wrote before reading this chapter. If you are reading this book as part of a course, compare your definition with your colleagues’ definitions. Develop a consensus definition with everyone in the course.

Part IV. Preview of Chap. 2

Now that you have a good idea of what research is, at least of what we believe research is, the next step is to think about how to actually begin doing research. This means how to begin formulating, testing, and revising hypotheses. As for all phases of scientific inquiry, there are lots of things to think about. Because it is critical to start well, we devote Chap. 2 to getting started with formulating hypotheses.

Agnes, M., & Guralnik, D. B. (Eds.). (2008). Hypothesis. In Webster’s new world college dictionary (4th ed.). Wiley.

Britannica. (n.d.). Scientific method. In Encyclopaedia Britannica. Retrieved July 15, 2022, from https://www.britannica.com/science/scientific-method

Brownell, W. A., & Moser, H. E. (1949). Meaningful vs. mechanical learning: A study in grade III subtraction. Duke University Press.

Cai, J., Morris, A., Hohensee, C., Hwang, S., Robison, V., Cirillo, M., Kramer, S. L., & Hiebert, J. (2019b). Posing significant research questions. Journal for Research in Mathematics Education, 50 (2), 114–120. https://doi.org/10.5951/jresematheduc.50.2.0114

Cambridge University Press. (n.d.). Hypothesis. In Cambridge dictionary. Retrieved July 15, 2022, from https://dictionary.cambridge.org/us/dictionary/english/hypothesis

Cronbach, L. J. (1957). The two disciplines of scientific psychology. American Psychologist, 12, 671–684.

Cronbach, L. J. (1975). Beyond the two disciplines of scientific psychology. American Psychologist, 30, 116–127.

Cronbach, L. J. (1986). Social inquiry by and for earthlings. In D. W. Fiske & R. A. Shweder (Eds.), Metatheory in social science: Pluralisms and subjectivities (pp. 83–107). University of Chicago Press.

Hay, C. M. (Ed.). (2016). Methods that matter: Integrating mixed methods for more effective social science research. University of Chicago Press.

Merriam-Webster. (n.d.). Explain. In Merriam-Webster.com dictionary. Retrieved July 15, 2022, from https://www.merriam-webster.com/dictionary/explain

National Research Council. (2002). Scientific research in education. National Academy Press.

Weis, L., Eisenhart, M., Duncan, G. J., Albro, E., Bueschel, A. C., Cobb, P., Eccles, J., Mendenhall, R., Moss, P., Penuel, W., Ream, R. K., Rumbaut, R. G., Sloane, F., Weisner, T. S., & Wilson, J. (2019a). Mixed methods for studies that address broad and enduring issues in education research. Teachers College Record, 121, 100307.

Weisner, T. S. (Ed.). (2005). Discovering successful pathways in children’s development: Mixed methods in the study of childhood and family life. University of Chicago Press.

Author information

Authors and affiliations.

School of Education, University of Delaware, Newark, DE, USA

James Hiebert, Anne K Morris & Charles Hohensee

Department of Mathematical Sciences, University of Delaware, Newark, DE, USA

Jinfa Cai & Stephen Hwang

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Hiebert, J., Cai, J., Hwang, S., Morris, A.K., Hohensee, C. (2023). What Is Research, and Why Do People Do It?. In: Doing Research: A New Researcher’s Guide. Research in Mathematics Education. Springer, Cham. https://doi.org/10.1007/978-3-031-19078-0_1

DOI : https://doi.org/10.1007/978-3-031-19078-0_1

Published : 03 December 2022

Publisher Name : Springer, Cham

Print ISBN : 978-3-031-19077-3

Online ISBN : 978-3-031-19078-0

eBook Packages : Education Education (R0)

What is Scientific Research?

Thank you to Julie Miller, reference intern, for helping to create this page.

Some people use the term research loosely, for example:

- People will say they are researching different online websites to find the best place to buy a new appliance or locate a lawn care service.

- TV news may talk about conducting research when they run a viewer poll on a current-events topic such as an upcoming election.

- Undergraduate students working on a term paper or project may say they are researching the internet to find information.

- Private sector companies may say they are conducting research to find a solution for a supply chain holdup.

However, none of the above is considered “scientific research” unless:

- The research contributes to a body of science by providing new information through ethical study design or

- The research follows the scientific method, an iterative process of observation and inquiry.

The Scientific Method

- Make an observation: notice a phenomenon in your life or in society or find a gap in the already published literature.

- Ask a question about what you have observed.

- Hypothesize about a potential answer or explanation.

- Make predictions about what you should observe if your hypothesis is correct.

- Design an experiment or study that will test your prediction.

- Test the prediction by conducting an experiment or study; report the outcomes of your study.

- Iterate! Was your prediction correct? Was the outcome unexpected? Did it lead to new observations?
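The iterative character of the steps above can be sketched as a small loop. This is a toy illustration under stated assumptions, not a model of real research: the `Hypothesis` class, the `supports`, `revise`, and `iterate` functions, the numeric tolerance, and the example observations are all hypothetical names and values introduced here, and real revision involves rethinking the explanation rather than simply adopting the latest observation.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    prediction: float  # what you expect to observe (a single number in this toy)
    rationale: str     # why you expect it


def supports(hypothesis, observation, tolerance=0.05):
    """Return True when the observation falls within tolerance of the prediction."""
    return abs(hypothesis.prediction - observation) <= tolerance


def revise(hypothesis, observation):
    """Toy revision: adopt the observed value and record why the prediction changed."""
    note = f"revised after observing {observation}"
    return Hypothesis(observation, f"{hypothesis.rationale}; {note}")


def iterate(hypothesis, observations):
    """Test the current hypothesis against each observation, revising after a miss."""
    history = [hypothesis]
    for observation in observations:
        if not supports(hypothesis, observation):
            hypothesis = revise(hypothesis, observation)
            history.append(hypothesis)
    return history


# Made-up observations, used only to exercise the loop.
history = iterate(Hypothesis(0.5, "initial guess"), [0.52, 0.7, 0.71])
for step in history:
    print(step)
```

Keeping every version in `history` mirrors the point of the "Iterate!" step: the record of how the prediction and its explanation changed is itself part of what was learned.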

The scientific method is not separate from the Research Process described in the rest of this guide. In fact, the Research Process is directly related to the observation stage of the scientific method. Understanding what other scientists and researchers have already studied will help you focus your area of study and build on their knowledge.

Designing your experiment or study is important for both natural and social scientists. Sage Research Methods (SRM) has an excellent "Project Planner" that guides you through the basic stages of research design. SRM also has excellent explanations of qualitative and quantitative research methods for the social sciences.

For the natural sciences, Springer Nature Experiments and Protocol Exchange have guidance on quantitative research methods.

Books, journals, reference books, videos, podcasts, data-sets, and case studies on social science research methods.

Sage Research Methods includes over 2,000 books, reference books, journal articles, videos, datasets, and case studies on all aspects of social science research methodology. Browse the methods map or the list of methods to identify a social science method to pursue further. Includes a project planning tool and the "Which Stats Test" tool to identify the best statistical method for your project. Includes the notable "little green book" series (Quantitative Applications in the Social Sciences) and the "little blue book" series (Qualitative Research Methods).

Platform connecting researchers with protocols and methods.

Springer Nature Experiments has been designed to help users/researchers find and evaluate relevant protocols and methods across the whole Springer Nature protocols and methods portfolio using one search. This database includes:

- Nature Protocols

- Nature Reviews Methods Primers

- Nature Methods

- Springer Protocols

Open repository for sharing scientific research protocols. These protocols are posted directly on the Protocol Exchange by authors and are made freely available to the scientific community for use and comment.

Includes these topics:

- Biochemistry

- Biological techniques

- Chemical biology

- Chemical engineering

- Cheminformatics

- Climate science

- Computational biology and bioinformatics

- Drug discovery

- Electronics

- Energy sciences

- Environmental sciences

- Materials science

- Molecular biology

- Molecular medicine

- Neuroscience

- Organic chemistry

- Planetary science

| | Natural Science | Social Science |
|---|---|---|
| Definition | The natural sciences are very precise, accurate, and independent of the person making the scientific observation. | The science of people or collections of people and their human activity and interactivity. |
| Example disciplines | astronomy, chemistry, engineering, physics; geology; biology, botany, medicine | |
| Example experiments | | |

Qualitative research is primarily exploratory. It is used to gain an understanding of underlying reasons, opinions, and motivations. Qualitative research is also used to uncover trends in thought and opinions and to dive deeper into a problem by studying an individual or a group.

Qualitative methods usually use unstructured or semi-structured techniques. The sample size is typically smaller than in quantitative research.

Example: interviews and focus groups.

Quantitative research is characterized by the gathering of data with the aim of testing a hypothesis. The data generated are numerical or, if not numerical, can be transformed into usable statistics.

Quantitative data collection methods are more structured than qualitative data collection methods and sample sizes are usually larger.

Example: survey
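As a minimal sketch of how survey data can be used to test a hypothesis, the Python example below compares two groups of ratings with Welch's t statistic. The ratings, the group names, and the `welch_t` helper are hypothetical and chosen only for illustration; a real study would also report a p-value and check the assumptions of the test.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and approximate degrees of freedom
    for two independent samples."""
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # Sample variance divided by sample size: squared standard error of each mean.
    se2_a = statistics.variance(sample_a) / len(sample_a)
    se2_b = statistics.variance(sample_b) / len(sample_b)
    t = (mean_a - mean_b) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(sample_a) - 1) + se2_b ** 2 / (len(sample_b) - 1)
    )
    return t, df


# Hypothetical 1-5 satisfaction ratings from two groups of survey respondents.
group_a = [4, 5, 3, 4, 5, 4, 4, 5]
group_b = [3, 2, 4, 3, 3, 2, 3, 4]

t_stat, dof = welch_t(group_a, group_b)
print(f"t = {t_stat:.2f}, degrees of freedom = {dof:.1f}")
```

A large absolute t value relative to the degrees of freedom suggests that the difference between the group means is unlikely to be due to chance alone.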

Note: The above descriptions of qualitative and quantitative research apply mainly to research in the social sciences. Most natural sciences rely on quantitative methods for their experiments.

Qualitative research approaches the world in its natural setting, in a way that reveals particularities, rather than studying it in a controlled setting. It aims to understand, describe, and sometimes explain social phenomena in a number of different ways, by studying:

- Experiences of individuals or groups

- Interactions and communications

- Documents (texts, images, film, or sounds, and digital documents)

- Experiences or interactions

Qualitative researchers seek to understand how people conceptualize the world around them, what they are doing, how they are doing it or what is happening to them in terms that are significant and that offer meaningful learnings.

Qualitative researchers develop and refine concepts (or hypotheses, if they are used) in the process of research and of collecting data. Cases (their history and complexity) are an important context for understanding the issue that is studied. A major part of qualitative research is based on text and writing, from field notes and transcripts to descriptions and interpretations, and finally to the presentation of the findings and of the research as a whole.

- Last Updated: Aug 12, 2024 2:59 PM

- URL: https://libguides.umflint.edu/research

scientific method

scientific method, mathematical and experimental technique employed in the sciences. More specifically, it is the technique used in the construction and testing of a scientific hypothesis.

The process of observing, asking questions, and seeking answers through tests and experiments is not unique to any one field of science. In fact, the scientific method is applied broadly in science, across many different fields. Many empirical sciences, especially the social sciences, use mathematical tools borrowed from probability theory and statistics, together with outgrowths of these, such as decision theory, game theory, utility theory, and operations research. Philosophers of science have addressed general methodological problems, such as the nature of scientific explanation and the justification of induction.

The scientific method is critical to the development of scientific theories, which explain empirical (experiential) laws in a scientifically rational manner. In a typical application of the scientific method, a researcher develops a hypothesis, tests it through various means, and then modifies the hypothesis on the basis of the outcome of the tests and experiments. The modified hypothesis is then retested, further modified, and tested again, until it becomes consistent with observed phenomena and testing outcomes. In this way, hypotheses serve as tools by which scientists gather data. From that data and the many different scientific investigations undertaken to explore hypotheses, scientists are able to develop broad general explanations, or scientific theories.

See also Mill’s methods; hypothetico-deductive method.

1 Science and scientific research

What is research? Depending on who you ask, you will likely get very different answers to this seemingly innocuous question. Some people will say that they routinely research different online websites to find the best place to buy the goods or services they want. Television news channels supposedly conduct research in the form of viewer polls on topics of public interest such as forthcoming elections or government-funded projects. Undergraduate students research on the Internet to find the information they need to complete assigned projects or term papers. Postgraduate students working on research projects for a professor may see research as collecting or analysing data related to their project. Businesses and consultants research different potential solutions to remedy organisational problems such as a supply chain bottleneck or to identify customer purchase patterns. However, none of the above can be considered ‘scientific research’ unless: it contributes to a body of science, and it follows the scientific method. This chapter will examine what these terms mean.

What is science? To some, science refers to difficult high school or university-level courses such as physics, chemistry, and biology meant only for the brightest students. To others, science is a craft practiced by scientists in white coats using specialised equipment in their laboratories. Etymologically, the word ‘science’ is derived from the Latin word scientia, meaning knowledge. Science refers to a systematic and organised body of knowledge in any area of inquiry that is acquired using ‘the scientific method’ (the scientific method is described further below). Science can be grouped into two broad categories: natural science and social science. Natural science is the science of naturally occurring objects or phenomena, such as light, objects, matter, earth, celestial bodies, or the human body. Natural sciences can be further classified into physical sciences, earth sciences, life sciences, and others. Physical sciences consist of disciplines such as physics (the science of physical objects), chemistry (the science of matter), and astronomy (the science of celestial objects). Earth sciences consist of disciplines such as geology (the science of the earth). Life sciences include disciplines such as biology (the science of living organisms) and botany (the science of plants). In contrast, social science is the science of people or collections of people, such as groups, firms, societies, or economies, and their individual or collective behaviours. Social sciences can be classified into disciplines such as psychology (the science of human behaviours), sociology (the science of social groups), and economics (the science of firms, markets, and economies).

The natural sciences are different from the social sciences in several respects. The natural sciences are very precise, accurate, deterministic, and independent of the person making the scientific observations. For instance, a scientific experiment in physics, such as measuring the speed of sound through a certain medium or the refractive index of water, should always yield the exact same results, irrespective of the time or place of the experiment, or the person conducting the experiment. If two students conducting the same physics experiment obtain two different values of these physical properties, then it generally means that one or both of those students must be in error. However, the same cannot be said for the social sciences, which tend to be less accurate, deterministic, or unambiguous. For instance, if you measure a person’s happiness using a hypothetical instrument, you may find that the same person is more happy or less happy (or sad) on different days and sometimes at different times on the same day. One’s happiness may vary depending on the news that person received that day or on the events that transpired earlier during that day. Furthermore, there is not a single instrument or metric that can accurately measure a person’s happiness. Hence, one instrument may calibrate a person as being ‘more happy’ while a second instrument may find that the same person is ‘less happy’ at the same instant in time. In other words, there is a high degree of measurement error in the social sciences and there is considerable uncertainty and little agreement on social science policy decisions. For instance, you will not find many disagreements among natural scientists on the speed of light or the speed of the earth around the sun, but you will find numerous disagreements among social scientists on how to solve a social problem such as reducing global terrorism or rescuing an economy from a recession. Any student studying the social sciences must be cognisant of and comfortable with handling the higher levels of ambiguity, uncertainty, and error that come with such sciences, which merely reflect the high variability of social objects.
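The contrast in measurement variability described above can be sketched numerically. The short Python example below compares the relative spread (the sample standard deviation divided by the mean) of two sets of repeated readings. All of the numbers and the `relative_spread` helper are hypothetical, invented purely for illustration, and are not drawn from any real experiment or instrument.

```python
import statistics


def relative_spread(readings):
    """Coefficient of variation: sample standard deviation divided by the mean."""
    return statistics.stdev(readings) / statistics.mean(readings)


# Hypothetical repeated measurements of the speed of sound in air (m/s),
# taken by five different students.
speed_of_sound = [343.1, 342.9, 343.0, 343.2, 342.8]

# Hypothetical happiness scores (0-10 scale) reported by one person on five days.
happiness = [7, 4, 8, 5, 3]

physics_cv = relative_spread(speed_of_sound)
happiness_cv = relative_spread(happiness)

print(f"speed of sound: relative spread = {physics_cv:.4%}")
print(f"happiness:      relative spread = {happiness_cv:.4%}")
```

Under these assumed readings, the physical measurements vary by a small fraction of a percent, while the happiness scores vary by a large fraction of their mean, which is the kind of variability a social scientist must be prepared to handle.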

Sciences can also be classified based on their purpose. Basic sciences, also called pure sciences, are those that explain the most basic objects and forces, relationships between them, and laws governing them. Examples include physics, mathematics, and biology. Applied sciences, also called practical sciences, are sciences that apply scientific knowledge from basic sciences in a physical environment. For instance, engineering is an applied science that applies the laws of physics and chemistry for practical applications such as building stronger bridges or fuel-efficient combustion engines, while medicine is an applied science that applies the laws of biology to solving human ailments. Both basic and applied sciences are required for human development. However, applied science cannot stand on its own, but instead relies on basic sciences for its progress. Of course, industry and private enterprises tend to focus more on applied sciences given their practical value, while universities study both basic and applied sciences.

Scientific knowledge